UAV and insect vision

by Thomas Netter, Aerospace and Robotics engineer [read resumé]

A robot that flies with a

Download IROS'02 paper: [A4], [US letter] (PDF, 212 KB)

Watch it avoid obstacles: [AVI Video, 6 Mbytes]

(Linux users: Codec = Radius Cinepak, viewable with xanim, xine, mplayer)

Flying insects rely on their panoramic eye and Optic Flow for guidance and obstacle avoidance. Furthermore, flies show a remarkable neural fusion of visual, inertial, and aerodynamic senses. Behavioural and neurophysiological studies of insects have inspired research in Artificial Intelligence and the construction of many autonomous robots. This flying robot was built within the Neurocybernetics Group of the Laboratory of Neurobiology, CNRS, Marseilles, France. Previous research on the visuo-motor system of the fly had led to the development of two mobile robots which feature an analogue electronic vision system based on Elementary Motion Detectors (EMD) derived from those of the fly.

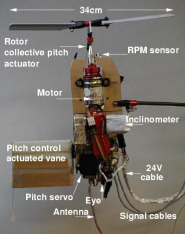

A tethered Unmanned Air Vehicle (UAV), called Fania, was developed to study Nap-of-the-Earth (NOE) flight (terrain following) and obstacle avoidance using a motion-sensing visual system. After an aerodynamic study, Fania was custom-built as a miniature (35 cm, 0.840 kg), electrically-powered, thrust-vectoring rotorcraft. It is constrained by a whirling-arm to 3 degrees of freedom with pitch and thrust control.

The robotic aircraft's onboard eye has 20 photoreceptors and senses moving contrasts through 19 ground-based neuromorphic EMDs. Visual, inertial, and tachometer signals from the aircraft are scanned by a data acquisition board in the flight control computer, which runs the Real-Time Linux operating system. A weighted average fusion of the visual inputs is used to command thrust. A PID controller regulates pitch. Flight commands are output via the parallel port to a microcontroller interfacing with a standard radio-control model transmitter.
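The two control laws named above, a weighted average of the EMD outputs for thrust and a PID loop for pitch, can be illustrated with a minimal Python sketch. This is not the original flight code: the function names, the weights, the gains, the linear mapping from fused signal to thrust, and the normalised 0 to 1 thrust range are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


def fuse_emd_outputs(emd_outputs: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted average of EMD outputs (one weight per EMD, e.g. 19 of each)."""
    if len(emd_outputs) != len(weights):
        raise ValueError("need exactly one weight per EMD output")
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(w * x for w, x in zip(weights, emd_outputs)) / total_weight


def thrust_command(fused: float, base_thrust: float = 0.5, gain: float = 0.1) -> float:
    """Hypothetical mapping: more perceived motion means more thrust.

    The result is clamped to a normalised range [0, 1] (an assumption).
    """
    return min(1.0, max(0.0, base_thrust + gain * fused))


@dataclass
class PID:
    """Textbook discrete PID controller; the gains are illustrative."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first sample, since there is no history yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


if __name__ == "__main__":
    emd = [0.2] * 19                      # made-up EMD readings
    weights = [1.0] * 19                  # uniform weights (assumption)
    thrust = thrust_command(fuse_emd_outputs(emd, weights))
    pitch_pid = PID(kp=2.0, ki=0.5, kd=0.1)
    pitch_cmd = pitch_pid.update(setpoint=0.0, measurement=0.05, dt=0.01)
    print(thrust, pitch_cmd)
```

In a sketch like this, non-uniform weights would let some viewing directions count more than others when setting thrust; how the weights were actually chosen for Fania is not stated here.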

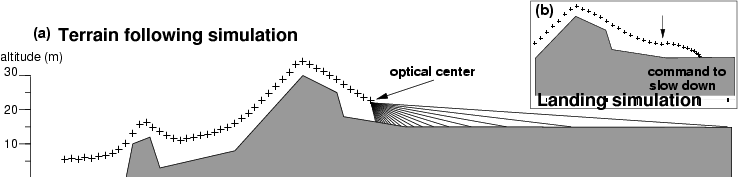

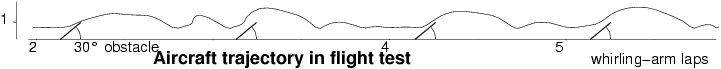

Vision-based terrain following (a) and landing (b) were simulated. Automatic obstacle-avoiding flights at speeds between 2 m/s and 3 m/s were demonstrated within the laboratory. This UAV project is at the intersection of Neurobiology, Robotics, and Aerospace. It provides principles and technology to assist urban operations of Micro Air Vehicles (MAV).

Related projects for flight with insect vision

Biorobotic Vision Laboratory (Srinivasan Lab), ANU, Canberra

Centeye, Washington DC

Dickinson Lab, Caltech, Pasadena

Autonomous Systems Lab, EPFL, Lausanne

Artificial Intelligence Lab, University of Zürich

Hans van Hateren's Lab, University of Groningen

Insect vision and motion detection at Institute of Neuroinformatics

Tobi Delbrück

Giacomo Indiveri

Shih-Chii Liu

Jörg Kramer